Variables

What is a Hop variable?

You don’t want to hardcode your solutions. It’s simply bad form to hardcode host names, user names, passwords, directories and so on. Variables allow your solutions to adapt to a changing environment. If for example the database server is different when developing than it is when running in production, you set it as a variable.

| Fields, parameters, and variables implicitly are available downstream if in scope. You can pass them again any number of levels, but the "get parameters/variables" button only retrieves from one level above. |

Fields

Fields are columns in data row(s) and are viewable in some transform field textboxes and columns and you can see the fields in scope and its value when looking at transform row results after executing a workflow/pipeline (click the little grid icon on the bottom-right of a transform to see a preview of the cached results). Field values can be passed upstream for example if you use a pipeline executor and fill in the Result rows tab and use it in conjunction with a “copy rows to result” transform in the child pipeline.

Parameters

Think of parameters as function arguments, which turn into variables with the same name. When adding parameters you are basically creating MyPipeline(parameter1, parameter2,..). Parameters (e.g. in pipeline or workflow properties) need to be declared at least once in every pipeline or workflow.

For example, if you set parameters on a pipeline executor, the pipeline that is being called must declare the same parameter names in its pipeline properties (set to NULL if you want to inherit values). Parameters can not be “sent” upstream, but you can use a Set variables transform, but they become variables in the scope they are defined in.

Explicit vs Implicit: If you set a parameter to a default value, it becomes explicit (e.g. when editing a pipeline in pipeline properties), and it will take precedence over the same named implicit variable (a passed-in variable), but not take precedence over the same named explicit parameter. So whenever you see a transform with a column title “Parameters/Variables” that means an explicit parameter is sent to a function that will then set a variable name overriding any previous set parameter with the same name (useful if wanting to override an explicitly set param/variable in the child).

To change implicit parameter behaviour to explicit, you can also disable “pass all parameters” on the pipeline action. Parameters are simply variables which are explicitly defined in a workflow or pipeline to make them recognizable from outside those objects. They can also have a description and a default value.

You cannot combine implicit variable inheritance with explicit parameter definitions. So, if you add parameters to the pipeline definition (or to a pipeline executor) and you want it set, you must add it to the parameters tab of the child pipeline action/transform even if the same variable already exists.

Advanced: there are multiple layers in hop where you can set variables that do or do not get overwritten downstream (Java → hop environment → project → run configuration → workflow → pipeline).

Variables

Variables are more global and the scope can be targeted (entire Java VM, grandparent workflow, etc.) whereas fields are the data flowing between the transforms. Local variables can be set in a pipeline but should not be used in the same pipeline as they are not thread-safe and can inherit a previous value. It is better to send or return variables to another pipeline/workflow before using them. Variables can be passed upstream if the variable was set to a larger scope. E.g.: if a variable was set in a parent with "valid in the current workflow".

Think of variables in 2 scopes: runtime variables and environment/workflow variables:

-

Runtime variables – Runtime variables depend on pipeline information to generate, so they cannot be set beforehand, you need to declare those differently to be able to use them.

-

Environment/workflow variables or parameters – Environment variables/parameters are set once and used when needed in any downstream workflow/pipeline and there is no need to use Get Variables , you can refer to them directly like ${myVariable} unless you need it in a field/data stream.

-

E.g.: Define a parameter only once, even just in the Pipeline Executor (no need to define in receiving pipeline)

-

A pipeline needs to start to get new variables. A running or nested pipeline can’t fetch new variable values. A pipeline is considered started when a pipeline starts for every row in a pipeline executor. An alternative is to use parameters.

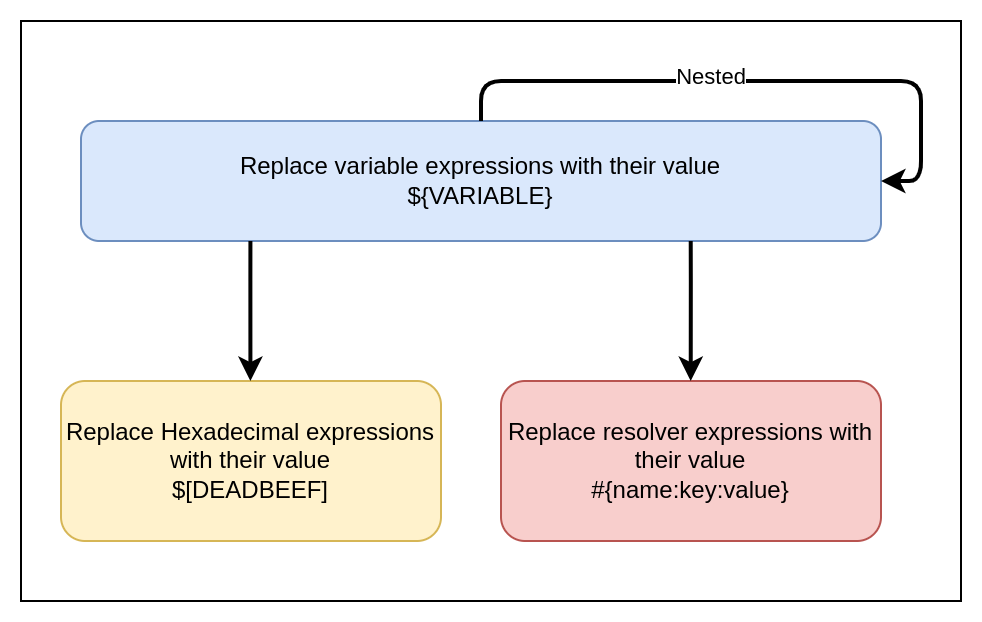

How do I use a variable?

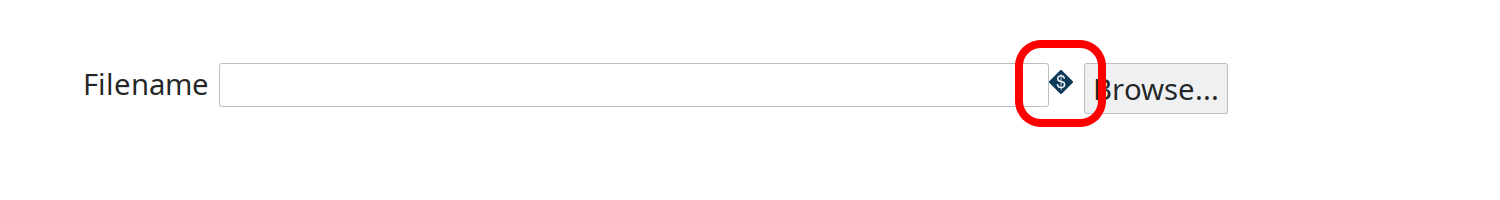

In the Hop user interface all places where you can enter a variable have a '$' symbol to the right of the input field:

You can specify a variable by referencing it between an opening ${ and a closing }.

${VARIABLE_NAME}Please note that in the case of a textvar (a text field that supports variables), you don’t have to specify a variable in these places. You can enter hard-coded values (although we advise to use variables where possible).

Combinations of a variable with other free-form text are also possible, like in the example below:

${ENVIRONMENT_HOME}/input/source-file.txt| You can see a list of defined variables by using CTRL-SPACE (CMD-SPACE on OSX) in the input field. This helper will insert a selected variable into the input field. Only environment variables and variables with the JVM scope are shown here. Variables that are created in pipeline or workflow with a parent, grant parent or root workflow job need to be entered manually |

Hexadecimal values

In rare cases you might have a need to enter non-character values as separators in 'binary' text files with for example a zero byte as a separator. In those scenarios you can use a special 'variable' format:

$[<hexadecimal value>]A few examples:

| Value | Meaning |

|---|---|

| A Newline character (CR) |

| A Newline character (LF) |

| A single byte with decimal value 0 |

| Two bytes with decimal value 65535 |

| The 2 bytes representing UTF-8 character Σ |

| The 3 bytes representing UTF-8 character ⌨ |

| The 4 bytes representing UTF-8 character 𝄞 |

Variable Resolvers

A variable resolver is a metadata element that allows us to find values using resolver plugins. The format to use to resolve variables using these metadata elements is the following:

#{name:key:element}For more information about how this works, go to the Variable Resolver page.

How can I define variables?

Variables can be defined and set in all the places where it makes sense:

-

in hop-config.json when it applies to the installation

-

in an environment configuration file when it concerns a specific lifecycle environment

-

In a project

-

As a default parameter value in a pipeline or workflow

-

Using the Set Variables transform in a pipeline

-

Using the Set Variables action in a workflow

-

When executing with Hop run

Locality

Variables are local to the place where they are defined.

This means that setting them in a certain place means that it’s going to be inherited from that place on.

This also means that’s important to know where variables can be set and used and the hierarchy behind it.

Hierarchy

| Place | Inheritance |

|---|---|

System properties | Inherited by the JVM and all other places in Hop |

Environment | Inherited by run configurations |

Run Configurations | Pipeline run configurations are inherited by the environment |

Pipeline | Inherited by the pipeline run configuration |

Workflow | Inherited by the workflow run configuration |

Metadata objects | Inherited by the place where it is loaded |

Parameters

Pipeline and workflows can (optionally) accept parameters.

Workflow and pipeline parameters are similar, and are a special type of variable that is only available within the current workflow or pipeline.

Workflow and pipeline parameters can have a default value and a description, and can be passed on from workflows and pipelines to other workflows and pipelines through a variety of workflow actions and pipeline transforms.

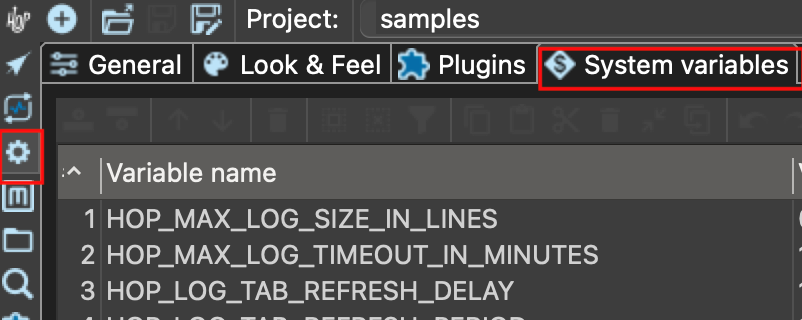

System properties

All system properties are variables that are available in all of your currently running Apache Hop instance. System properties are available as variables as well as all Java system properties.

System properties get set in the Java Virtual Machine that runs your Apache Hop instance. This means that you should limit yourself to only those variables which are really system specific.

You can add your own system variables to the ones that come with Apache Hop by default in the System Variables tab in the configuration perspective.

These variables will be written to the hop-config.json file in your hop installation’s config folder or in your ${HOP_CONFIG_FOLDER} location.

even though you can manually change the hop-config.json or any of the other configuration files, you’ll rarely, if ever, need to. Only change hop-config.json manually if you know what you’re doing. |

{

"systemProperties" : {

"MY_SYSTEM_PROPERTY" : "SomeValue"

}

}You can also use the hop-config command line tool to define system properties:

sh hop-conf.sh -s MY_SYSTEM_PROPERTY=SomeValueEnvironment Variables

You can specify variables in project lifecycle environments as well.

This helps you configure folders and other things which are environment specific, and lets you keep a clean separation between code (your project) and configuration (your environment).

You can set those in the environment settings dialog or using the command line:

sh hop-conf.sh -e MyEnvironment -em -ev VARIABLE1=value1Run Configurations

You can specify variables in pipeline run configurations and workflow run configurations to make a pipeline or workflow run in an engine agnostic way.

For example, you can have the same pipeline run on Hadoop with Spark and specify an input directory using hdfs:// and on Google DataFlow using gs://

Workflow

You can define variables in a workflow either with the Set Variables, JavaScript actions or by using parameters.

Pipelines

You can define variables in a pipeline either with the Set Variables, JavaScript transforms or by defining parameters.

IMPORTANT As mentioned in the Set Variables documentation page, you can’t set and use variables in the same pipeline, since all transforms in a pipeline run in parallel.

Available global variables

The following variables are available in Hop through the configuration perspective. If you have the menu toolbar enabled in the configuration perspective’s General tab, these variables are also available through Tools → Edit config variables.

| Variable name | Default Value | Description |

|---|---|---|

HOP_AGGREGATION_ALL_NULLS_ARE_ZERO | N | Set this variable to Y to return 0 when all values within an aggregate are NULL. Otherwise by default a NULL is returned when all values are NULL. |

HOP_AGGREGATION_MIN_NULL_IS_VALUED | N | Set this variable to Y to set the minimum to NULL if NULL is within an aggregate. Otherwise by default NULL is ignored by the MIN aggregate and MIN is set to the minimum value that is not NULL. See also the variable HOP_AGGREGATION_ALL_NULLS_ARE_ZERO. |

HOP_ALLOW_EMPTY_FIELD_NAMES_AND_TYPES | N | Set this variable to Y to allow your pipeline to pass 'null' fields and/or empty types. |

HOP_BATCHING_ROWSET | N | Set this variable to 'Y' if you want to test a more efficient batching row set. |

HOP_DEFAULT_BIGNUMBER_FORMAT | The name of the variable containing an alternative default bignumber format | |

HOP_DEFAULT_BUFFER_POLLING_WAITTIME | 20 | This is the default polling frequency for the transforms input buffer (in ms) |

HOP_DEFAULT_DATE_FORMAT | The name of the variable containing an alternative default date format | |

HOP_DEFAULT_INTEGER_FORMAT | The name of the variable containing an alternative default integer format | |

HOP_DEFAULT_NUMBER_FORMAT | The name of the variable containing an alternative default number format | |

HOP_DEFAULT_SERVLET_ENCODING | Defines the default encoding for servlets, leave it empty to use Java default encoding | |

HOP_DEFAULT_TIMESTAMP_FORMAT | The name of the variable containing an alternative default timestamp format | |

HOP_DISABLE_CONSOLE_LOGGING | N | Set this variable to Y to disable standard Hop logging to the console. (stdout) |

HOP_EMPTY_STRING_DIFFERS_FROM_NULL | N | NULL vs Empty String. If this setting is set to Y, an empty string and null are different. Otherwise they are not. |

HOP_FILE_OUTPUT_MAX_STREAM_COUNT | 1024 | This project variable is used by the Text File Output transform. It defines the max number of simultaneously open files within the transform. The transform will close/reopen files as necessary to insure the max is not exceeded |

HOP_FILE_OUTPUT_MAX_STREAM_LIFE | 0 | This project variable is used by the Text File Output transform. It defines the max number of milliseconds between flushes of files opened by the transform. |

HOP_GLOBAL_LOG_VARIABLES_CLEAR_ON_EXPORT | N | Set this variable to N to preserve global log variables defined in pipeline / workflow Properties → Log panel. Changing it to true will clear it when export pipeline / workflow. |

HOP_JSON_INPUT_INCLUDE_NULLS | Y | Name of te variable to set so that Nulls are considered while parsing JSON files. If HOP_JSON_INPUT_INCLUDE_NULLS is "Y" then nulls will be included otherwise they will not be included (default behavior) |

HOP_LENIENT_STRING_TO_NUMBER_CONVERSION | N | System wide flag to allow lenient string to number conversion for backward compatibility. If this setting is set to "Y", an string starting with digits will be converted successfully into a number. (example: 192.168.1.1 will be converted into 192 or 192.168 or 192168 depending on the decimal and grouping symbol). The default (N) will be to throw an error if non-numeric symbols are found in the string. |

HOP_LICENSE_HEADER_FILE | - | This is the name of the variable which when set should contains the path to a file which will be included in the serialization of pipelines and workflows |

HOP_LOG_MARK_MAPPINGS | N | Set this variable to 'Y' to precede transform/action name in log lines with the complete path to the transform/action. Useful to perfectly identify where a problem happened in our process. |

HOP_LOG_SIZE_LIMIT | 0 | The log size limit for all pipelines and workflows that don’t have the "log size limit" property set in their respective properties. |

HOP_LOG_TAB_REFRESH_DELAY | 1000 | The hop log tab refresh delay. |

HOP_LOG_TAB_REFRESH_PERIOD | 1000 | The hop log tab refresh period. |

HOP_MAX_ACTIONS_LOGGED | 5000 | The maximum number of action results kept in memory for logging purposes. |

HOP_MAX_LOGGING_REGISTRY_SIZE | 10000 | The maximum number of logging registry entries kept in memory for logging purposes. This is the number of logging objects, a logging object can be a pipeline/workflow/transform/action or a couple of system-level loggers. |

HOP_MAX_LOG_SIZE_IN_LINES | 0 | The maximum number of log lines that are kept internally by Hop. Set to 0 to keep all rows (default) |

HOP_MAX_LOG_TIMEOUT_IN_MINUTES | 1440 | The maximum age (in minutes) of a log line while being kept internally by Hop. Set to 0 to keep all rows indefinitely (default) |

HOP_MAX_TAB_LENGTH | - | A variable to configure Tab size |

HOP_MAX_WORKFLOW_TRACKER_SIZE | 5000 | The maximum age (in minutes) of a log line while being kept internally by Hop. Set to 0 to keep all rows indefinitely (default) |

HOP_PASSWORD_ENCODER_PLUGIN | Hop | Specifies the password encoder plugin to use by ID (Hop is the default). |

HOP_PIPELINE_ROWSET_SIZE | - | Name of the environment variable that contains the size of the pipeline rowset size. This overwrites values that you set pipeline settings |

HOP_PLUGIN_CLASSES | A comma delimited list of classes to scan for plugin annotations | |

HOP_ROWSET_GET_TIMEOUT | 50 | The name of the variable that optionally contains an alternative rowset get timeout (in ms). This only makes a difference for extremely short lived pipelines. |

HOP_ROWSET_PUT_TIMEOUT | 50 | The name of the variable that optionally contains an alternative rowset put timeout (in ms). This only makes a difference for extremely short lived pipelines. |

HOP_S3_VFS_PART_SIZE | 5MB | The default part size for multi-part uploads of new files to S3 (added and used by by the AWS S3 VFS plugin) |

HOP_SERVER_DETECTION_TIMER | - | The name of the variable that defines the timer used for detecting server nodes |

HOP_SERVER_JETTY_ACCEPTORS | A variable to configure jetty option: acceptors for Hop Server | |

HOP_SERVER_JETTY_ACCEPT_QUEUE_SIZE | A variable to configure jetty option: acceptQueueSize for Hop Server | |

HOP_SERVER_JETTY_RES_MAX_IDLE_TIME | A variable to configure jetty option: lowResourcesMaxIdleTime for Hop Server | |

HOP_SERVER_OBJECT_TIMEOUT_MINUTES | 1440 | This project variable will set a time-out after which waiting, completed or stopped pipelines and workflows will be automatically cleaned up. The default value is 1440 (one day). |

HOP_SERVER_REFRESH_STATUS | - | A variable to configure refresh for Hop server workflow/pipeline status page |

HOP_SPLIT_FIELDS_REMOVE_ENCLOSURE | N | Set this variable to N to preserve enclosure symbol after splitting the string in the Split fields transform. Changing it to true will remove first and last enclosure symbol from the resulting string chunks. |

HOP_SYSTEM_HOSTNAME | You can use this variable to speed up hostname lookup. Hostname lookup is performed by Hop so that it is capable of logging the server on which a workflow or pipeline is executed. | |

HOP_TRANSFORM_PERFORMANCE_SNAPSHOT_LIMIT | 0 | The maximum number of transform performance snapshots to keep in memory. Set to 0 to keep all snapshots indefinitely (default) |

HOP_USE_NATIVE_FILE_DIALOG | N | Set this value to Y if you want to use the system file open/save dialog when browsing files |

HOP_ZIP_MAX_ENTRY_SIZE | - | A variable to configure the maximum file size of a single zip entry |

HOP_ZIP_MAX_ENTRY_SIZE_DEFAULT_STRING | ||

HOP_ZIP_MAX_TEXT_SIZE | - | A variable to configure the maximum number of characters of text that are extracted before an exception is thrown during extracting text from documents |

HOP_ZIP_MAX_TEXT_SIZE_DEFAULT_STRING | - | |

HOP_ZIP_MIN_INFLATE_RATIO | - | A variable to configure the minimum allowed ratio between de- and inflated bytes to detect a zipbomb |

HOP_ZIP_MIN_INFLATE_RATIO_DEFAULT_STRING | - | |

NEO4J_LOGGING_CONNECTION | Set this variable to the name of an existing Neo4j connection to enable execution logging to a Neo4j database. |

Environment variables

Set the environment variables listed below in your operating system to configure Hop’s startup behavior:

- HOP_AUDIT_FOLDER

-

Set this variable to a valid path on your machine to store Hop’s audit information. This information includes last opened files per project, zoom size and lots more.

- HOP_CONFIG_FOLDER

-

Hop stores your configuration in the

configfolder by default. Set this environment variable to point to a folder outside of your Hop installation to keep your configuration, projects and environment list etc, no matter which Hop version or installation you use.

copy the contents of an existing hop/conf folder to the path set in HOP_CONFIG_FOLDER to move the configuration from one of your Hop installations to your new central location. |

- HOP_PLUGIN_BASE_FOLDERS

-

Set this variable to point Hop to a comma separated list of folders where you want Hop to look for additional plugins.

When using this variable it will also unset your default plugins folder, make sure to add the default plugin folder to the comma separated list. This can be a relative path to the installation eg. export HOP_PLUGIN_BASE_FOLDERS=./plugins,/additional/plugin/folder.The ./plugins will point to the plugins in the base installation folder |

- HOP_SHARED_JDBC_FOLDERS

-

This is a comma separated list of folder containing JDBC drivers, default value is lib/jdbc. If you still need the default jdbc drivers when changing this you need to include the default folder path.

Additionally, the following environment variables can help you to add even more fine-grained configuration for your Apache Hop installation:

| Variable | Default | Description |

|---|---|---|

HOP_AUTO_CREATE_CONFIG | N | Set this variable to 'Y' to automatically create config file when it’s missing. |

HOP_METADATA_FOLDER | - | The system environment variable pointing to the alternative location for the Hop metadata folder |

HOP_REDIRECT_STDERR | N | Set this variable to Y to redirect stderr to Hop logging. |

HOP_REDIRECT_STDOUT | N | Set this variable to Y to redirect stdout to Hop logging. |

HOP_SIMPLE_STACK_TRACES | N | System wide flag to log stack traces in a simpler, more human-readable format |

Internal variables

| Variable | Default | Description |

|---|---|---|

${Internal.Workflow.Filename.Folder} | N | The full directory path (folder) where the current workflow (.hwf) file is stored. This is useful for dynamically referencing the location of workflow files, especially when working across different environments or directories. |

${Internal.Workflow.Filename.Name} | N | The name of the current workflow file (.hwf) without the folder path or extension. Useful for logging or dynamically referencing the workflow name in tasks. |

${Internal.Workflow.Name} | N | The name of the current workflow as defined within the project, not the filename. This can be used to document or log workflow execution dynamically. |

${Internal.Workflow.ID} | N | The unique ID of the current workflow execution. Useful for tracking execution instances in logs or within dynamic workflows. |

${Internal.Workflow.ParentID} | N | The unique ID of the parent workflow if the current workflow was started by another workflow. This is helpful for tracing parent-child workflow relationships in logging. |

${Internal.Entry.Current.Folder} | N | The folder where the currently running action (entry) resides. Useful for organizing logs or resources dynamically based on where actions are executed from. |

${Internal.Pipeline.Filename.Directory} | N | The full directory path where the current pipeline (.hpl) file is located. Useful when building dynamic file paths or organizing files relative to the pipeline. |

${Internal.Pipeline.Filename.Name} | N | The name of the current pipeline file (.hpl) without the folder path or extension. Useful for logging or referencing the pipeline name in scripts and configuration. |

${Internal.Pipeline.Name} | N | The name of the current pipeline as defined within the project. This can be used for tracking or logging pipeline executions dynamically. |

${Internal.Pipeline.ID} | N | The unique ID of the current pipeline execution. This ID is useful for referencing and tracking execution instances in logs or external systems. |

${Internal.Pipeline.ParentID} | N | The unique ID of the parent pipeline if the current pipeline was started by another pipeline. Useful for tracking parent-child relationships between pipelines. |

${Internal.Transform.Partition.ID} | N | The ID of the partition in a partitioned transform. It allows users to track or log data partitions during parallel processing. |

${Internal.Transform.Partition.Number} | N | The partition number for partitioned processing in a transform. This is useful for distributing data processing tasks across multiple instances. |

${Internal.Transform.Name} | N | The name of the currently executing transform within a pipeline. It helps in logging and identifying which transform is performing certain actions during execution. |

${Internal.Transform.CopyNr} | N | The number of the transform copy that is executing. When transforms are run in parallel, this variable helps differentiate between the instances of the transform. |

${Internal.Transform.ID} | N | The unique ID of the transform instance. Useful for tracking transform execution and debugging. |

${Internal.Transform.BundleNr} | N | The bundle number for partitioned execution, helpful in load-balancing or distributing data across partitions. |

${Internal.Action.ID} | N | The unique ID of the current action (entry) in a workflow. Useful for tracking specific actions within a larger workflow. |