Running the Apache Beam samples

Prerequisites

The steps on this page and the detail pages for Spark, Flink and Google Dataflow will set up everything you need to run the pipelines in the Hop samples project.

Java

Because Apache Hop itself runs on Java, you already have Java installed. Both Apache Hop and Beam require a Java 11 environment.

Double-check your Java version with the java -version command. Your output should look similar to the example below.

openjdk version "11.0.15" 2022-04-19

OpenJDK Runtime Environment Homebrew (build 11.0.15+0)

OpenJDK 64-Bit Server VM Homebrew (build 11.0.15+0, mixed mode)

The samples project

The Hop samples project comes with a number of sample pipelines for Apache Beam. A default Hop installation includes the samples project. If yours doesn’t, create a new project and point its Home folder to <HOP>/config/projects/samples.

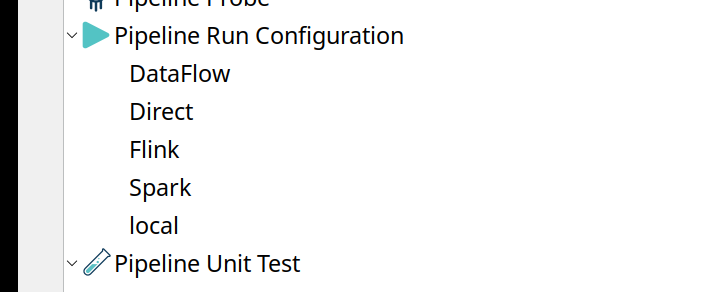

The samples project contains the following pipeline run configurations:

- local: the native local run configuration. We’ll be ignoring this run configuration in the context of this guide.

- Dataflow: the Apache Beam run configuration for Google Cloud Dataflow.

- Direct: the direct runner Apache Beam run configuration. The Direct Runner executes pipelines on your machine and is designed to validate that pipelines adhere to the Apache Beam model as closely as possible. Instead of focusing on efficient pipeline execution, the Direct Runner performs additional checks to ensure that users do not rely on semantics that are not guaranteed by the model.

- Flink: the Apache Beam run configuration for Apache Flink.

- Spark: the Apache Beam run configuration for Apache Spark.

Build your Hop Fat Jar

Apache Beam requires a so-called fat jar that bundles all required Java classes and their dependencies into a single jar file.

Build this jar for your Hop installation through Tools → Generate a Hop fat jar.

Save this file in a convenient location under a recognizable file name. Either store it outside of your project folder or add it to your .gitignore, so you don’t accidentally add hundreds of MB to your git repository.
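If you keep the jar inside the project folder, the .gitignore option can be scripted. The snippet below is a minimal sketch, not part of Hop: the helper name ignore_once is hypothetical, and the file name in the usage note is a placeholder for whatever name you chose when saving the jar. It appends a pattern to a .gitignore file only when that exact line is not already present.

```shell
#!/bin/sh
# ignore_once PATTERN [GITIGNORE_FILE]
# Hypothetical helper: append PATTERN to the ignore file (default: ./.gitignore)
# unless that exact line is already listed.
ignore_once() {
  pattern="$1"
  file="${2:-.gitignore}"
  touch "$file"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
  if ! grep -qxF -- "$pattern" "$file"; then
    # If the file does not end with a newline, add one so the new entry
    # does not get glued onto the last existing line.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      printf '\n' >> "$file"
    fi
    printf '%s\n' "$pattern" >> "$file"
  fi
}
```

Run from the project folder, a call such as ignore_once "hop-fat.jar" (placeholder file name) adds the entry once. Repeated calls leave the file unchanged.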

Flink and Spark: export your project metadata

You’ll need to export your project’s metadata to JSON so that you can pass it to either spark-submit or flink run.

Export your project metadata through Tools → Export metadata to JSON.

Save this file in a convenient location under a recognizable file name. Either store it outside of your project folder or add it to your .gitignore. Your project’s metadata folder should already be in version control, so there is no need to also commit this point-in-time export of the full metadata.
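To show how the fat jar and the metadata export fit together, here is a rough sketch that only prints the submit commands instead of running them. Everything specific in it is an assumption to verify against the Spark and Flink detail pages: the main class name org.apache.hop.beam.run.MainBeam, the argument order (pipeline file, metadata JSON, run configuration name), the Spark master URL, and all paths, which are placeholders.

```shell
#!/bin/sh
# Placeholder paths (assumptions): replace with your own files.
HOP_FAT_JAR="/path/to/hop-fat.jar"
HOP_METADATA_JSON="/path/to/hop-metadata.json"
PIPELINE="/path/to/pipeline.hpl"
SPARK_MASTER="spark://localhost:7077"

# Assumed entry point inside the fat jar; check the detail pages for your Hop version.
MAIN_CLASS="org.apache.hop.beam.run.MainBeam"

# Print (do not execute) a spark-submit command for the Spark run configuration.
spark_cmd() {
  printf 'spark-submit --master %s --class %s %s %s %s Spark\n' \
    "$SPARK_MASTER" "$MAIN_CLASS" "$HOP_FAT_JAR" "$PIPELINE" "$HOP_METADATA_JSON"
}

# Print (do not execute) a flink run command for the Flink run configuration.
flink_cmd() {
  printf 'flink run --class %s %s %s %s Flink\n' \
    "$MAIN_CLASS" "$HOP_FAT_JAR" "$PIPELINE" "$HOP_METADATA_JSON"
}

spark_cmd
flink_cmd
```

Printing the commands first makes it easy to review the paths before submitting anything to a cluster. The last argument in each command is meant to match the name of the run configuration in the samples project.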