Concepts

Tools

- Hop Conf

Hop Conf is a command line tool to manage various aspects of your Hop configuration: projects, environments, cloud configuration and more.

- Hop Encrypt

Hop Encrypt is a command line tool that obfuscates or encrypts a plain text password for use in XML, password or metadata files. When you copy the result, make sure to also copy the password encryption prefix. The prefix marks the password as obfuscated, which lets Hop distinguish obfuscated passwords from regular plain text ones.
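The role of the prefix can be illustrated with a small Java sketch. It assumes a prefix of `Encrypted ` and uses a toy XOR-and-hex scheme; both are stand-ins chosen for illustration, not Hop's actual obfuscation code, and `PasswordPrefixSketch` is a hypothetical class. What the sketch does show is why the prefix matters: a value without it is taken as plain text and used as-is.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical sketch of the "prefix marks an obfuscated password" convention.
// ASSUMPTIONS: the prefix text and the XOR-with-key scheme are illustrative only;
// this is NOT Hop's actual obfuscation algorithm.
final class PasswordPrefixSketch {
    static final String PREFIX = "Encrypted ";  // assumed prefix text
    private static final byte[] KEY = "hypothetical-key".getBytes(StandardCharsets.UTF_8);

    // Obfuscate: XOR each byte with a repeating key, hex-encode, then prepend the prefix.
    static String obfuscate(String plain) {
        byte[] in = plain.getBytes(StandardCharsets.UTF_8);
        StringBuilder out = new StringBuilder(PREFIX);
        for (int i = 0; i < in.length; i++) {
            int b = (in[i] ^ KEY[i % KEY.length]) & 0xFF;
            out.append(String.format("%02x", b));
        }
        return out.toString();
    }

    // A value is treated as obfuscated only if it starts with the prefix.
    static boolean isObfuscated(String value) {
        return value != null && value.startsWith(PREFIX);
    }

    // Returns the usable password: prefixed values are decoded, anything else passes through.
    static String resolve(String value) {
        if (!isObfuscated(value)) {
            return value;
        }
        String hex = value.substring(PREFIX.length());
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            int b = Integer.parseInt(hex.substring(2 * i, 2 * i + 2), 16);
            out[i] = (byte) (b ^ KEY[i % KEY.length]);
        }
        return new String(out, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String stored = obfuscate("s3cret");
        System.out.println(isObfuscated(stored));  // true
        System.out.println(resolve(stored));       // s3cret
        System.out.println(resolve("plainpass"));  // plainpass (no prefix, used as-is)
    }
}
```

Dropping the prefix when copying the value turns it into an opaque string that is then used literally as the password, which is exactly the mistake the note above warns against.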

- Hop Gui

Hop Gui is the visual IDE where Hop data developers create, test, run and manage the life cycle of workflows and pipelines. In addition to functionality for development and life cycle management, Hop Gui contains tools and perspectives to manage projects and environments, to search and manage metadata, to manage and version control a large variety of files and to explore logging in a Neo4j graph.

- Hop Run

Hop Run is a command line tool to run workflows and pipelines, with options to list or specify the projects, environments, properties and run configurations to use.

- Hop Search

Hop Search is a command line tool to search all metadata available in a specific project or environment.

- Hop Server

Hop Server is a web service interface to manage and run workflows and pipelines.

- Hop Translator

Hop Translator is a GUI tool that allows non-technical users to translate Hop into their native language.

Item types

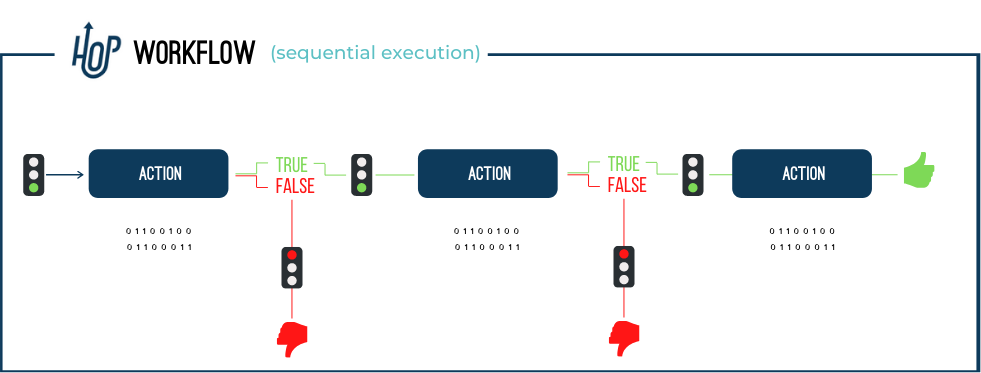

- Action

An Action is one operation performed in a Workflow. Actions are executed sequentially by default, with parallel execution as a configuration option. An Action returns a true or false exit code, which can be used (or ignored) in the Workflow’s execution.

- Hop

A Hop links Actions in a Workflow or Transforms in a Pipeline. In Workflows, Hops are followed based on the exit status of the previous Action; in Pipelines, Hops pass data between Transforms.

- Pipeline

Pipelines are the actual data workers. Operations in a Pipeline read, modify, enrich, clean and write data. Orchestration of Pipelines is done through other Pipelines and/or Workflows.

- Transform

A Transform is a unit of work performed in a Pipeline. Typical Transform operations are reading data from files or databases, performing lookups or joins, enriching and cleaning data, and more. All Transforms in a Pipeline are executed in parallel. Transforms process data and move batches of processed data over Hops to be processed by subsequent Transforms.
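The parallel, streaming nature of a Pipeline can be modeled in a few lines of Java. This is a simplified sketch, not Hop's engine: `PipelineSketch` and its three "transforms" are hypothetical, each transform runs in its own thread, and each hop is modeled as a small bounded `BlockingQueue` of rows. Because every transform starts at once, the clean step works on early rows while the read step is still producing later ones.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Simplified model of a pipeline (hypothetical names, not Hop's engine): every
// transform runs in its own thread, and each hop is a small bounded buffer of rows.
final class PipelineSketch {
    // Marker row telling the next transform that no more rows will arrive.
    private static final String END_OF_DATA = "\u0000END_OF_DATA";

    static List<String> run(List<String> input) {
        BlockingQueue<String> readToClean = new ArrayBlockingQueue<>(2);
        BlockingQueue<String> cleanToWrite = new ArrayBlockingQueue<>(2);
        List<String> written = new ArrayList<>();

        // "Read" transform: emits the input rows; put() blocks while the hop is full.
        Thread read = new Thread(() -> {
            try {
                for (String row : input) {
                    readToClean.put(row);
                }
                readToClean.put(END_OF_DATA);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        // "Clean" transform: trims and upper-cases each row as soon as it arrives.
        Thread clean = new Thread(() -> {
            try {
                for (String row = readToClean.take(); !row.equals(END_OF_DATA); row = readToClean.take()) {
                    cleanToWrite.put(row.trim().toUpperCase(Locale.ROOT));
                }
                cleanToWrite.put(END_OF_DATA);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        // "Write" transform: collects the cleaned rows.
        Thread write = new Thread(() -> {
            try {
                for (String row = cleanToWrite.take(); !row.equals(END_OF_DATA); row = cleanToWrite.take()) {
                    written.add(row);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        // All transforms start together and run concurrently.
        read.start();
        clean.start();
        write.start();
        try {
            read.join();
            clean.join();
            write.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for transforms", e);
        }
        return written;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of(" alice ", "bob", " carol"))); // [ALICE, BOB, CAROL]
    }
}
```

The bounded buffers are a deliberate choice in this sketch: a fast upstream transform blocks instead of filling memory when a downstream transform falls behind.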

- Workflow

A Workflow is a sequence of Actions that are performed sequentially by default, with optional parallel execution. Workflows usually do not operate on the data directly, but perform orchestration tasks. Typical tasks in a Workflow include retrieving and archiving data, sending emails, error handling, etc.
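The interplay between Actions, their true/false exit codes and Workflow Hops can be sketched in Java. The sketch assumes three hop conditions (followed unconditionally, only on a true result, or only on a false result); `WorkflowSketch` and its methods are hypothetical, it models only the default sequential execution, and it does not guard against loops.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Simplified model of sequential workflow execution (hypothetical names, not Hop's API).
// Each action returns true or false; each hop states under which result it is followed.
final class WorkflowSketch {
    enum Condition { UNCONDITIONAL, ON_TRUE, ON_FALSE }

    record Hop(String to, Condition condition) {}

    private final Map<String, BooleanSupplier> actions = new HashMap<>();
    private final Map<String, List<Hop>> hops = new HashMap<>();

    WorkflowSketch action(String name, BooleanSupplier body) {
        actions.put(name, body);
        return this;
    }

    WorkflowSketch hop(String from, String to, Condition condition) {
        hops.computeIfAbsent(from, k -> new ArrayList<>()).add(new Hop(to, condition));
        return this;
    }

    // Runs one action at a time, starting at `start`, and follows only the hops whose
    // condition matches the result of the action they leave. Returns the run order.
    List<String> run(String start) {
        List<String> executed = new ArrayList<>();
        Deque<String> pending = new ArrayDeque<>();
        pending.push(start);
        while (!pending.isEmpty()) {
            String name = pending.pop();
            boolean result = actions.get(name).getAsBoolean();
            executed.add(name);
            for (Hop hop : hops.getOrDefault(name, List.of())) {
                boolean follow = switch (hop.condition()) {
                    case UNCONDITIONAL -> true;
                    case ON_TRUE -> result;
                    case ON_FALSE -> !result;
                };
                if (follow) {
                    pending.push(hop.to());
                }
            }
        }
        return executed;
    }

    public static void main(String[] args) {
        WorkflowSketch workflow = new WorkflowSketch()
                .action("Start", () -> true)
                .action("Check file", () -> false)  // pretend the input file is missing
                .action("Load data", () -> true)
                .action("Send error mail", () -> true)
                .hop("Start", "Check file", Condition.UNCONDITIONAL)
                .hop("Check file", "Load data", Condition.ON_TRUE)
                .hop("Check file", "Send error mail", Condition.ON_FALSE);
        System.out.println(workflow.run("Start")); // [Start, Check file, Send error mail]
    }
}
```

Because "Check file" returns false, only the hop conditioned on a false result is followed, so the error-handling branch runs and "Load data" is skipped.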

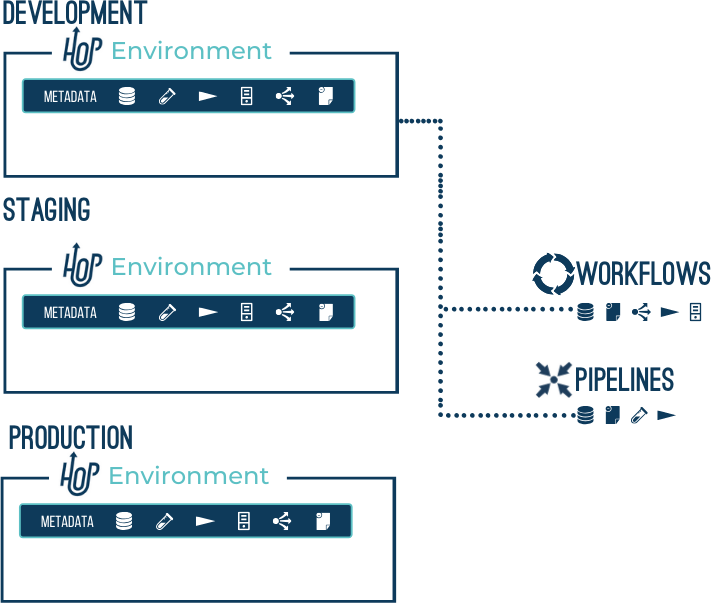

Projects and Environments

- Project

Hop Projects are a conceptual grouping of configurations, variables, metadata objects, workflows and pipelines. Projects can inherit metadata from parent projects. A project contains one or more environments where the actual configuration is defined.

Example: a 'Sales' project contains a 'customers' database connection and a number of workflows and pipelines. The runtime configurations, database connection properties, etc. are defined in the 'dev', 'uat' and 'prd' environments.

- Environment

Hop Environments are instances of projects that hold the actual runtime configurations and other metadata objects for a project.

Example: the 'dev' environment for the 'Sales' project specifies that the 'customers' database connection reads from host '10.0.0.1'.
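One way to picture the 'Sales' example is a connection defined once in the project in terms of variables, with each environment supplying the values. The Java sketch below assumes the `${NAME}` variable syntax and uses hypothetical names throughout: `EnvironmentSketch`, the `DB_HOST`/`DB_PORT` variables, the port, the 'prd' host and the JDBC URL are illustrative, not Hop's configuration format.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Sketch: the project stores a connection definition that refers to variables, and
// each environment supplies the values. Variable names, hosts and the JDBC URL are
// hypothetical examples, not Hop's configuration format.
final class EnvironmentSketch {
    private static final Pattern VARIABLE = Pattern.compile("\\$\\{([^}]+)\\}");

    // Replaces every ${NAME} with the environment's value; unknown names stay as-is.
    static String resolve(String text, Map<String, String> environment) {
        Matcher m = VARIABLE.matcher(text);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String value = environment.getOrDefault(m.group(1), m.group());
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Defined once in the 'Sales' project.
        String customersUrl = "jdbc:postgresql://${DB_HOST}:${DB_PORT}/customers";
        // Defined per environment.
        Map<String, String> dev = Map.of("DB_HOST", "10.0.0.1", "DB_PORT", "5432");
        Map<String, String> prd = Map.of("DB_HOST", "10.0.0.2", "DB_PORT", "5432");
        System.out.println(resolve(customersUrl, dev)); // jdbc:postgresql://10.0.0.1:5432/customers
        System.out.println(resolve(customersUrl, prd)); // jdbc:postgresql://10.0.0.2:5432/customers
    }
}
```

The workflows and pipelines in the project never change; switching environments only changes the values substituted at runtime.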

Metadata

Hop Metadata is the central storage repository for shared metadata such as relational database connections, run configurations, servers, git repositories and so on. Metadata is persisted as JSON and is stored in the project's base folder by default.

Various

The following items are an alphabetically ordered list of concepts that are used throughout Hop and will be mentioned at various locations in the Hop tools and documentation.

- Lazy Loading

If lazy conversion is enabled, all data conversions (character decoding, data conversion, trimming, …) for the data being read are postponed as long as possible, effectively reading the data as binary fields. Enabling lazy conversion can significantly decrease the CPU cost of reading data.

When to avoid: if the data needs to be converted later in the stream anyway, postponing the conversion may slow things down instead of speeding them up.

When to use: lazy conversion may speed things up when 1) data is read and written to another file without conversion; 2) data needs to be sorted and doesn't fit in memory, in which case serialization to disk is faster because encoding and type conversions are postponed; or 3) data is bulk-loaded into a database without the need for data conversion, since bulk loading utilities typically read text directly and generating this text is faster (this does not apply to Table Output).
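The idea behind lazy conversion can be sketched in Java as a field that keeps the bytes exactly as they were read and decodes them only when a typed value is first requested. `LazyField` is a hypothetical class, not Hop's implementation. The sketch shows both sides of the trade-off: passing the raw bytes through costs no conversion at all, while any step that needs the typed value still pays for the conversion, just later.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

// Sketch of lazy conversion (hypothetical class, not Hop's implementation): a field
// keeps the bytes exactly as read and only decodes and trims them on first use.
final class LazyField {
    private final byte[] raw;
    private final Charset charset;
    private String converted; // null until a typed value is first requested

    LazyField(byte[] raw, Charset charset) {
        this.raw = raw;
        this.charset = charset;
    }

    // Pass-through path, e.g. copying to another file: no decoding happens.
    byte[] rawBytes() {
        return raw;
    }

    // Conversion path: decode and trim once, then reuse the cached result.
    String asString() {
        if (converted == null) {
            converted = new String(raw, charset).trim();
        }
        return converted;
    }

    long asLong() {
        return Long.parseLong(asString());
    }

    boolean isConverted() {
        return converted != null;
    }

    public static void main(String[] args) {
        LazyField amount = new LazyField(" 42 ".getBytes(StandardCharsets.UTF_8), StandardCharsets.UTF_8);
        byte[] copied = amount.rawBytes();        // written out unchanged
        System.out.println(copied.length);        // 4
        System.out.println(amount.isConverted()); // false
        System.out.println(amount.asLong() + 1);  // 43
        System.out.println(amount.isConverted()); // true
    }
}
```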